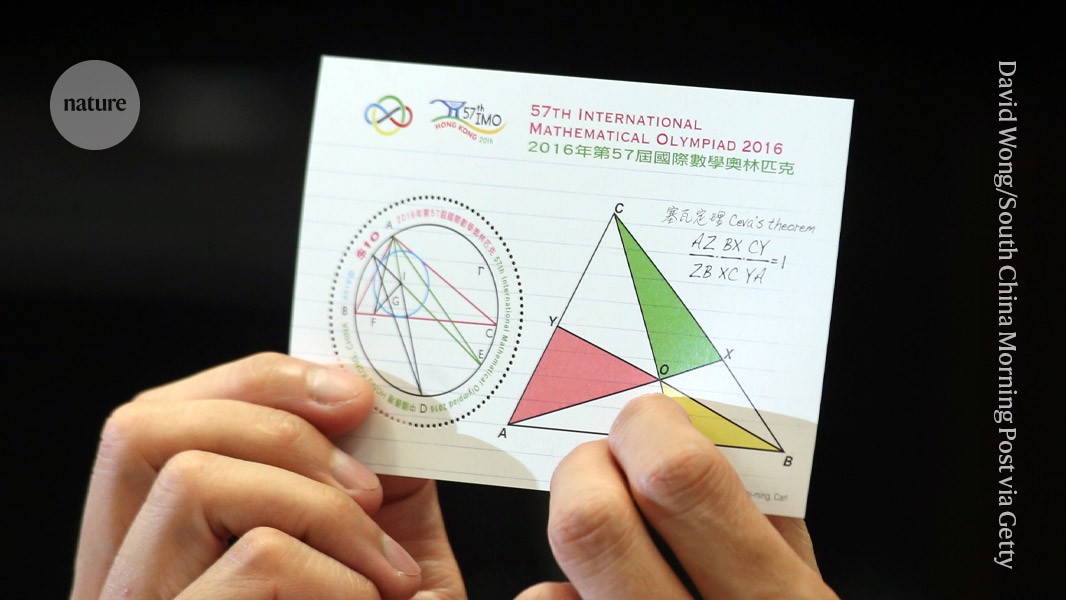

Problems set at the International Mathematical Olympiad are from several areas of mathematics.Credit: David Wong/South China Morning Post via Getty

After beating humans at everything from the game of Go to strategy board games, Google DeepMind now says it is on the verge of besting the world’s top students at solving mathematics problems.

The London-based machine-learning company announced on 25 July that its artificial intelligence (AI) systems had solved four of the six problems that were given to school students at the 2024 International Mathematical Olympiad (IMO) in Bath, UK, this month. The AI produced rigorous, step-by-step proofs that were marked by two top mathematicians and earned a score of 28/42 — just one point shy of the gold-medal range.

DeepMind AI solves geometry problems at star-student level

“It’s clearly a very substantial advance,” says Joseph Myers, a mathematician based in Cambridge, UK, who — together with Fields Medal-winner Tim Gowers — vetted the solutions and who had helped select the original problems for this year’s IMO.

DeepMind and other companies are in a race to eventually have machines give proofs that would solve substantial research questions in maths. Problems set at the IMO — the world’s premier competition for young mathematicians — have become a benchmark for progress towards that goal, and have come to be seen as a “grand challenge” for machine learning, the company says.

“This is the first time any AI system has been able to achieve medal-level performance”, said Pushmeet Kohli, vice-president of AI for science at DeepMind, in a briefing to reporters. “This is a key milestone in the journey of building advanced theorem provers,” said Kohli.

Branching out

Only months ago, in January, the DeepMind system AlphaGeometry had achieved medallist-level performance at solving one type of IMO problem, those in Euclidean geometry. The first AI that performs at gold-medal level for the overall test — including questions in algebra, combinatorics and number theory, which are generally considered more challenging than geometry — will be eligible for a US$5 million award called the AI Mathematical Olympiad (AIMO) Prize. (The prize has strict criteria such as making code open-source and working with limited computing power, which means that DeepMind’s current efforts would not qualify.)

In their latest effort, the researchers used AlphaGeometry2 to solve the geometry problem in under 20 seconds; the AI is an improved and faster version of their record-setting system, says DeepMind computer scientist Thang Luong.

For the other types of questions, the team developed an entirely new system called AlphaProof. AlphaProof solved the competition’s two algebra problems, plus one in number theory, something that took it three days. (Participants in the actual IMO are given two sessions of 4.5 hours each.) It was not able to solve the two problems in combinatorics, another area of mathematics.

The Mathematical Olympiad is the world’s premier contest for school-aged maths whizzes.Credit: MoiraM/Alamy

Researchers have achieved mixed results when trying to answer mathematical questions with language models — the type of system that powers chatbots such as ChatGPT. Sometimes, the models give the correct answer but are not able to rationally explain their reasoning, and sometimes they spew out nonsense.

Just last week, a team of researchers from software companies Numina and HuggingFace used a language model to win an intermediate AIMO ‘progress prize’ based on simplified versions of IMO problems. The companies made their entire systems open-source and available for other researchers to download. But the winners told Nature that to solve harder problems, language models alone would probably not be enough.

A-grade solver

AlphaProof combines a language model with the technique of reinforcement learning, using the ‘AlphaZero’ engine the company has employed successfully for attacking games such as Go as well as some specific mathematical problems. In reinforcement learning, a neural network learns by trial-and-error. This works well when its answers can be evaluated by some objective metric. For that purpose, AlphaProof was trained to read and write proofs in a formal language called Lean, which is used in ‘proof assistant’ software package of the same name popular with mathematicians. To do so, AlphaProof tested whether its outputs were correct by running them in the Lean package, which helped to fill in some of the steps in the code.

Training any language model requires massive amounts of data, but few mathematical proofs were available in Lean. To overcome this problem, the team designed an additional network’ that attempted to translate an existing record of a million problems written in natural language into Lean — but without including human-written solutions, says Thomas Hubert, a DeepMind machine-learning researcher who co-led the development of AlphaProof. “Our approach was, can we learn to prove, even if we originally didn’t train on human-written proofs?” (The company had a similar approach with Go, where its AI learned to play the game by playing against itself, rather than from the way humans do.)

Magic keys

Many of the Lean translations were nonsensical, but enough were good enough to get AlphaProof to the point that it could start its reinforcement-learning cycles. The results were much better than expectations, Gowers said at the press briefing. “Many of the problems in the IMO have this magic-key property. The problem looks hard at first until you find a magic key that unlocks it,” said Gowers, who is at the Collège de France in Paris.

In some cases, AlphaProof seemed able to provide that extra leap of creativity, providing a correct step out of an infinitely large range of possibilities. But it will take further analysis to establish whether the answers were less surprising than they looked, Gowers added. A similar debate ensued following the surprising ‘move 37’ taken by DeepMind’s AlphaGo bot in its famed 2016 defeat of the world’s top human Go player — a watershed for AI.

Whether the techniques can be perfected to the point of doing research-level work in mathematics remains to be seen, Myers said in the press briefing. “Can it extend to other sorts of mathematics where there might not be a million problems to train on?”

“We’re at the point where they can prove not open research problems, but at least problems that are very challenging to the very best young mathematicians in the world,” said DeepMind computer scientist David Silver, who in the mid-2010s was the leading researcher in developing AlphaGo.