Fake images generated by artificial intelligence have proliferated so quickly that they’re now nearly as common as those manipulated by text or traditional editing tools like Photoshop, according to researchers at Google and several fact-checking organizations.

The findings offer an indication of just how quickly the technology has been embraced by people seeking to spread false information. But researchers warned that AI is still just one way in which pictures are used to mislead the public — the most common continues to be real images taken out of context.

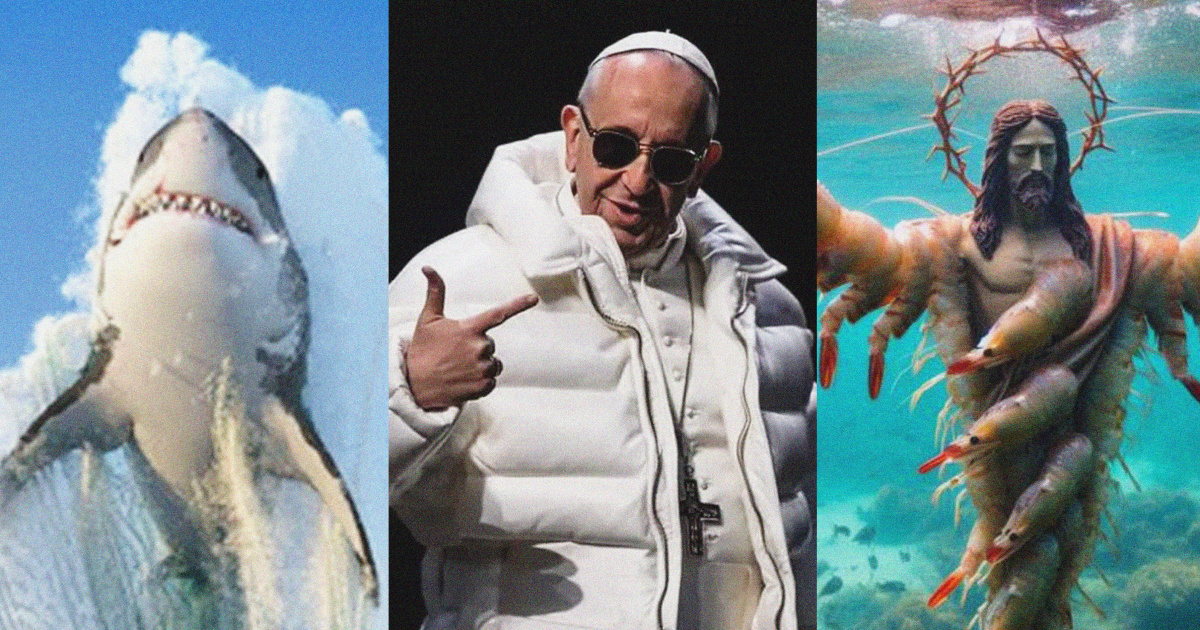

In a paper released online this month but not yet peer-reviewed, the researchers tracked misinformation trends by analyzing nearly 136,000 fact-checks published between 1995 and November 2023, most of them after 2016. They found that AI accounted for very little image-based misinformation until spring of 2023, right around when fake photos of Pope Francis in a puffer coat went viral.

“The sudden prominence of AI-generated content in fact checked misinformation claims suggests a rapidly changing landscape,” the researchers wrote.

The lead researchers and representatives for Google did not comment in time for publication.

Alexios Mantzarlis, who first flagged and reviewed the latest research in his newsletter, Faked Up, said the democratization of generative AI tools has made it easy for almost anyone to spread false information online.

“We go through waves of technological advancements that shock us in their capacity to manipulate and alter reality, and we are going through one now,” said Mantzarlis, who is the director of the Security, Trust, and Safety Initiative at Cornell Tech, Cornell University’s graduate campus in New York City. “The question is, how quickly can we adapt? And then, what safeguards can we put in place to avoid their harms?”


The researchers found that about 80% of fact-checked misinformation claims involve media such as images and video, with video increasingly dominating those claims since 2022.

Despite the rise of AI, the study found that real images paired with false claims about what they depict or imply continue to spread without any need for AI or even photo editing.

“While AI-generated images did not cause content manipulations to overtake context manipulations, our data collection ended in late 2023 and this may have changed since,” the researchers wrote. “Regardless, generative-AI images are now a sizable fraction of all misinformation-associated images.”

Text also appears in about 80% of all image-based misinformation, most commonly in screenshots.

“We were surprised to note that such cases comprise the majority of context manipulations,” the paper stated. “These images are highly shareable on social media platforms, as they don’t require that the individual sharing them replicate the false context claim themselves: they’re embedded in the image.”

Cayce Myers, a public relations professor and graduate studies director at Virginia Tech’s School of Communication, said context manipulations can be even harder to detect than AI-generated images because they already look authentic.

“In that sense, that’s a much more insidious problem,” said Myers, who reviewed the recent findings before being interviewed. “Because if you have, let’s say, a totally AI-generated image that someone can look at and say, ‘That doesn’t look quite right,’ that’s a lot different than seeing an actual image that is captioned in a way that is misrepresenting what the image is of.”

Even AI-based misinformation, however, is quickly growing harder to detect as technology advances. Myers said traditional hallmarks of an AI-generated image — abnormalities such as misshapen hands, garbled text or a dog with five legs — have diminished “tremendously” since these tools first became widespread.

Earlier this month, during the Met Gala, two viral AI-generated images of Katy Perry (who wasn’t at the event) looked so realistic at first glance that even her mom mistakenly thought the singer was in attendance.

And while the study noted that AI models aren’t typically trained to generate images like screenshots and memes, they may soon learn to produce those types of images reliably as new iterations of advanced language models continue to roll out.

To reliably distinguish misinformation as AI tools grow more sophisticated, Mantzarlis said people will have to learn to question the content’s source or distributor rather than the visuals themselves.

“The content alone is no longer going to be sufficient for us to make an assessment of truthfulness, trustworthiness or veracity,” Mantzarlis said. “I think you need to have the full context: Who shared it with you? How was it shared? How do you know it was them?”

But the study acknowledged that relying solely on fact-checked claims doesn’t capture the full scope of misinformation, since the images that go viral are often the ones that end up being fact-checked. The study also relied only on English-language claims. This leaves out many less-viewed or non-English pieces of misinformation that circulate unchecked in the wild.

Still, Mantzarlis said he believes the study reflects a “good sample” of English-language misinformation cases online, particularly those that have reached a substantial enough audience for fact-checkers to take notice.

For Myers, the bigger limitation affecting any study on disinformation — especially in the age of AI — will be the fast-changing nature of the disinformation itself.

“The problem for people who are looking at how to get a handle on disinformation is that it’s an evolving technological reality,” Myers said. “And capturing that is difficult, because what you study in May of 2024 may already be a very different reality in June of 2024.”